Artificial Intelligence (AI) has come a long way in the last decade. From chatbots to coding assistants, today’s large language models (LLMs) like GPT or Claude can perform an incredible range of tasks. They write essays, generate business plans, translate languages, and even simulate conversations that feel surprisingly human. But beneath the surface, these systems share a frustrating limitation: they don’t really learn. Once deployed, an LLM doesn’t naturally evolve with new information. Instead, it starts fresh every time you prompt it, drawing only on its training data and whatever you put in the prompt. If you want the system to improve, you usually need to retrain or fine-tune the entire model, which is expensive, slow, and hard to maintain.

Now, a groundbreaking framework from Stanford University, called Agentic Context Engineering (ACE), could change that. ACE reimagines how AI systems grow and adapt, paving the way for self-improving, context-aware AI that learns from experience instead of retraining.

What Is Agentic Context Engineering?

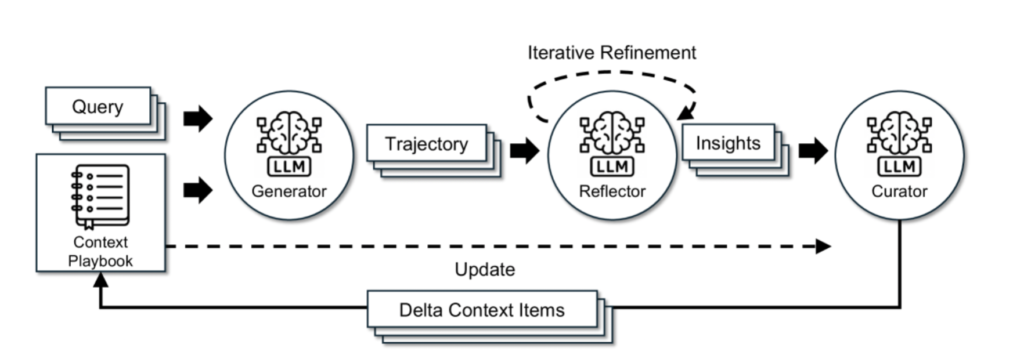

Agentic Context Engineering (ACE) is a research framework designed to make large language models self-improving through context, not by altering their internal weights.

Instead of retraining or fine-tuning a model’s neural parameters, the framework lets the AI evolve its understanding by refining the context it uses. You can think of it as giving an AI a living playbook: a structured, evolving memory that grows with experience. Over time, this playbook becomes richer, more detailed, and better aligned with the tasks the AI performs.

How It Works: The AI That Reflects, Learns, and Refines

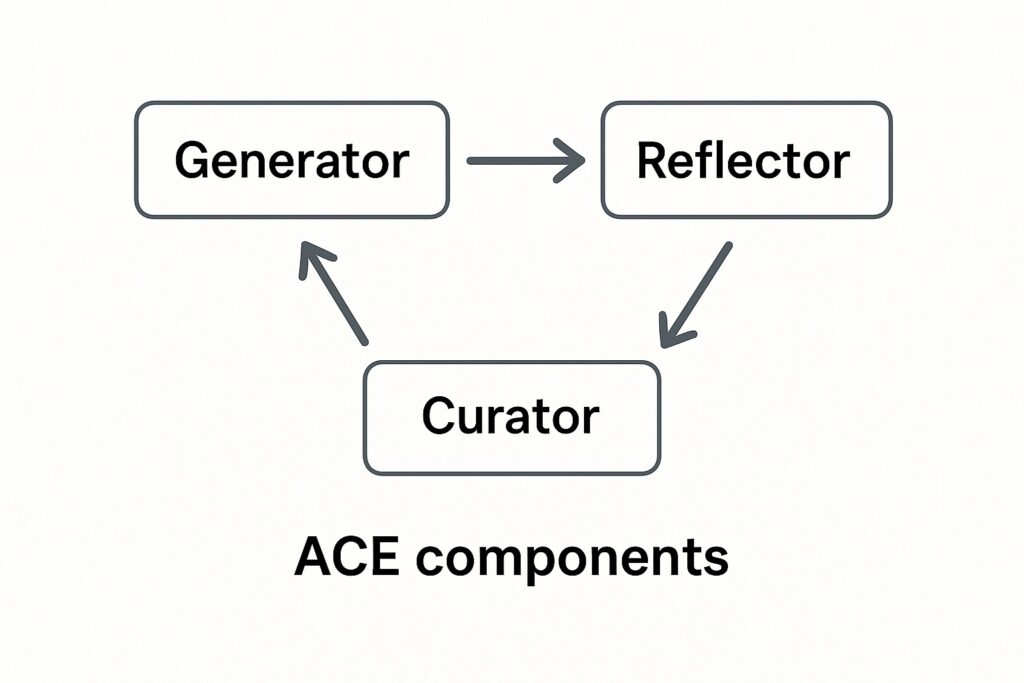

ACE breaks the learning process into three specialized components:

- Generator – Performs the task and produces reasoning traces or outputs.

- Reflector – Reviews outcomes, identifies mistakes, and extracts lessons.

- Curator – Updates the AI’s evolving playbook, preserving useful insights and pruning redundant ones.

This architecture allows the model to keep learning and improving without forgetting prior knowledge. Rather than rewriting everything, ACE performs incremental context updates: small, precise refinements that accumulate over time.

The result is an AI that can remember what worked, recognize what failed, and intelligently adapt future decisions.
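To make the three roles concrete, here is a minimal Python sketch of one ACE-style learning step. It is not Stanford’s implementation: `Playbook`, `generator`, `reflector`, `curator`, and `ace_step` are hypothetical names, and each role is a stub where a real system would call an LLM. What it illustrates is the update style: the Curator only appends new lessons and never rewrites existing ones.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Minimal sketch of the Generator -> Reflector -> Curator loop.
# All three roles are hypothetical stubs; a real system would back each one with an LLM call.


@dataclass
class Playbook:
    """The evolving context: a list of human-readable lessons ("bullets")."""
    bullets: List[str] = field(default_factory=list)

    def render(self) -> str:
        # Serialized form that would be prepended to the Generator's prompt.
        return "\n".join(f"- {b}" for b in self.bullets)


def generator(task: str, playbook: Playbook) -> str:
    # Stub: a real Generator would send playbook.render() plus the task to an LLM.
    return f"attempt at {task!r} using {len(playbook.bullets)} lessons"


def reflector(task: str, output: str, succeeded: bool) -> List[str]:
    # Stub: a real Reflector would ask an LLM to diagnose `output` (unused here).
    if succeeded:
        return []
    return [f"Lesson from failed task {task!r}: check edge cases before answering."]


def curator(playbook: Playbook, lessons: List[str]) -> None:
    # Incremental update: append only lessons that are not already present.
    # Existing bullets are never rewritten, so nothing learned earlier is lost.
    for lesson in lessons:
        if lesson not in playbook.bullets:
            playbook.bullets.append(lesson)


def ace_step(task: str, playbook: Playbook, check: Callable[[str], bool]) -> str:
    """Run one task: generate, reflect on the result, then curate the playbook."""
    output = generator(task, playbook)
    lessons = reflector(task, output, check(output))
    curator(playbook, lessons)
    return output


if __name__ == "__main__":
    pb = Playbook()
    ace_step("parse dates", pb, check=lambda out: False)  # failure -> one new lesson
    ace_step("parse dates", pb, check=lambda out: False)  # same lesson is not duplicated
    ace_step("sum numbers", pb, check=lambda out: True)   # success adds nothing
    print(pb.render())
```

After these three steps the playbook holds a single bullet: the repeated failure does not duplicate it, and the success adds nothing.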

Example: How ACE Differs from Today’s Prompt-Based LLMs

To understand why ACE matters, consider how most LLMs work today.

Current Prompt-Based LLMs

When you use a model like GPT-4, Claude, or Gemini, it operates entirely on static context, i.e., the text you give it at that moment. It doesn’t retain experience from past sessions. Each interaction is like teaching a student something new, only for them to forget everything once the conversation ends.

If you want the model to improve, developers must either retrain it or manually design better prompts. The latter is called prompt engineering.

ACE-Enabled LLMs

Instead of rewriting the entire prompt every time, ACE adds incremental updates. Over time, the context becomes richer, smarter, and more reliable without collapsing into vague summaries.

For example:

- Suppose an ACE agent writes a Python script but makes an error in handling file paths.

- The Reflector identifies this failure and extracts a lesson: “When working with user directories, always use os.path.expanduser().”

- The Curator then adds this insight to the playbook.

- Because the playbook is included in the agent’s context, the next time it writes code it applies that correction automatically, without being told.
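The file-path example can be sketched in a few lines of Python. This is an illustration under stated assumptions, not ACE’s code: the playbook is a plain list of strings, and `build_prompt` and `resolve_user_path` are hypothetical helpers showing how a curated lesson might be prepended to the Generator’s next prompt and what the corrected behavior looks like.

```python
import os
from typing import List

# Hypothetical playbook containing the lesson the Reflector extracted.
playbook: List[str] = [
    "When working with user directories, always use os.path.expanduser().",
]


def build_prompt(task: str, lessons: List[str]) -> str:
    """Hypothetical helper: prepend curated lessons so every new task sees them."""
    bullet_text = "\n".join(f"- {lesson}" for lesson in lessons)
    return f"Playbook:\n{bullet_text}\n\nTask: {task}"


def resolve_user_path(path: str) -> str:
    """The corrected behavior: expand a leading '~' before touching the filesystem.

    Python's open() treats '~' literally, so open("~/reports/summary.txt")
    looks for a directory named '~' inside the current working directory.
    """
    return os.path.expanduser(path)


print(build_prompt("Write a script that reads ~/reports/summary.txt", playbook))
print(resolve_user_path("~/reports/summary.txt"))
```

The lesson lives outside the model, in text the agent reads on every task, which is why no retraining is needed for it to take effect.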

With ACE, AI agents stop being static responders and start acting like adaptive collaborators. They grow smarter by refining their contexts, not by retraining their entire neural network.

The Problems ACE Solves

Imagine you’re writing a novel. Each time you start a new chapter, your editor deletes half the previous one. Over time, the storyline gets vague, the details vanish, and the plot collapses. That’s what can happen to an LLM’s context when it is repeatedly rewritten and condensed.

Brevity Bias

As prompts evolve, the model tends to compress and oversimplify them. What started as a rich instruction becomes a one-liner. Critical details vanish, and the output loses depth. This is brevity bias – a tendency to strip complexity until what remains is too shallow to be useful.

Context Collapse

Another issue is context collapse. When instructions, histories, or notes pile up and the model repeatedly rewrites them as a whole, it can overwrite them or drop crucial elements. It’s like stacking sticky notes until they slide off and leave a blank page. For businesses using AI to automate workflows or customer support, this means that lessons learned one day are gone the next.

How ACE Addresses These Issues

ACE tackles both issues by preserving and enriching knowledge. Each update adds valuable context instead of overwriting it, so the AI becomes more knowledgeable over time, not less.
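The difference between rewriting a context wholesale and updating it incrementally can be shown with a toy Python example. The `budget` cap in `monolithic_rewrite` is a deliberately crude, hypothetical stand-in for a model that compresses its context on every rewrite; real brevity bias and context collapse come from LLM summarization, not a fixed list limit. `delta_update` mirrors the ACE idea of adding to the context rather than replacing it.

```python
from typing import List

# Toy illustration only. `monolithic_rewrite` is a hypothetical stand-in for an LLM
# that regenerates the whole context and keeps it short; it is not any real system.


def monolithic_rewrite(context: List[str], new_lesson: str, budget: int = 2) -> List[str]:
    """Regenerate the entire context each time, keeping at most `budget` items."""
    merged = context + [new_lesson]
    return merged[-budget:]  # older details silently fall out


def delta_update(context: List[str], new_lesson: str) -> List[str]:
    """ACE-style incremental update: add the new item, leave existing items untouched."""
    return context if new_lesson in context else context + [new_lesson]


# Hypothetical lessons gathered over three tasks.
lessons = [
    "Expand '~' with os.path.expanduser() before opening files.",
    "Validate API responses before indexing into them.",
    "Round currency values only at the final step.",
]

rewritten: List[str] = []
incremental: List[str] = []
for lesson in lessons:
    rewritten = monolithic_rewrite(rewritten, lesson)
    incremental = delta_update(incremental, lesson)

print(len(rewritten), len(incremental))  # 2 3
```

After three tasks, the rewritten context has already lost the first lesson, while the incrementally updated one still holds all three.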

Why ACE Is a Game-Changer

ACE represents a shift from static intelligence to dynamic adaptation, which enables AI systems to grow smarter through use.

Key Advantages

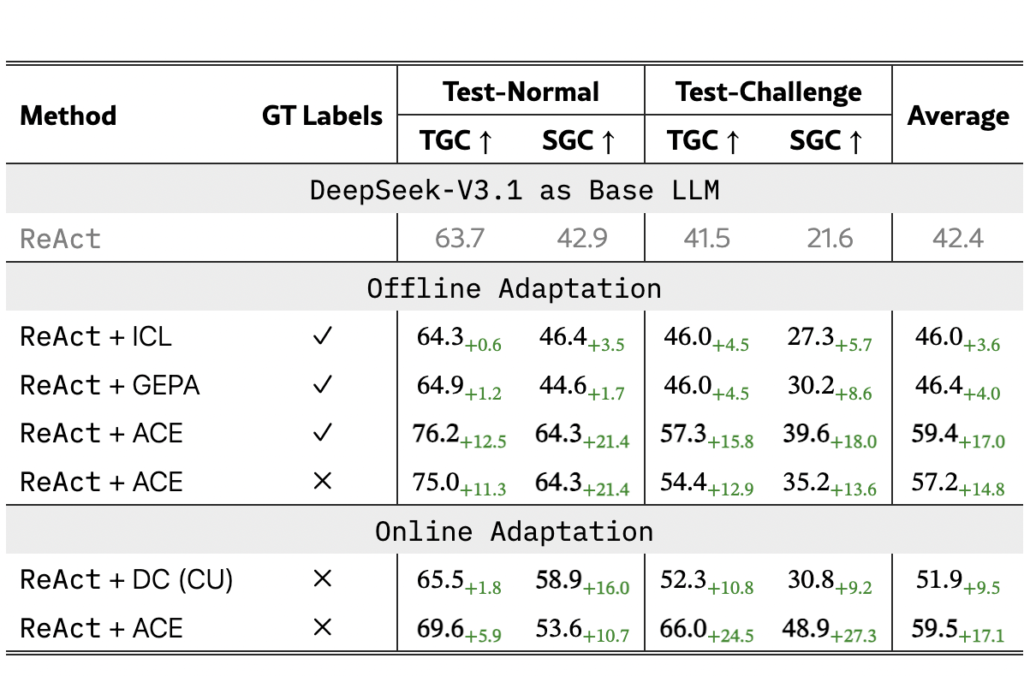

- Self-improvement without retraining: The model learns naturally from task feedback, using success or error signals as lessons.

- Lower cost, higher efficiency: ACE reduces adaptation latency by over 80% compared with the baseline methods it was tested against, and achieves higher accuracy with fewer computational resources.

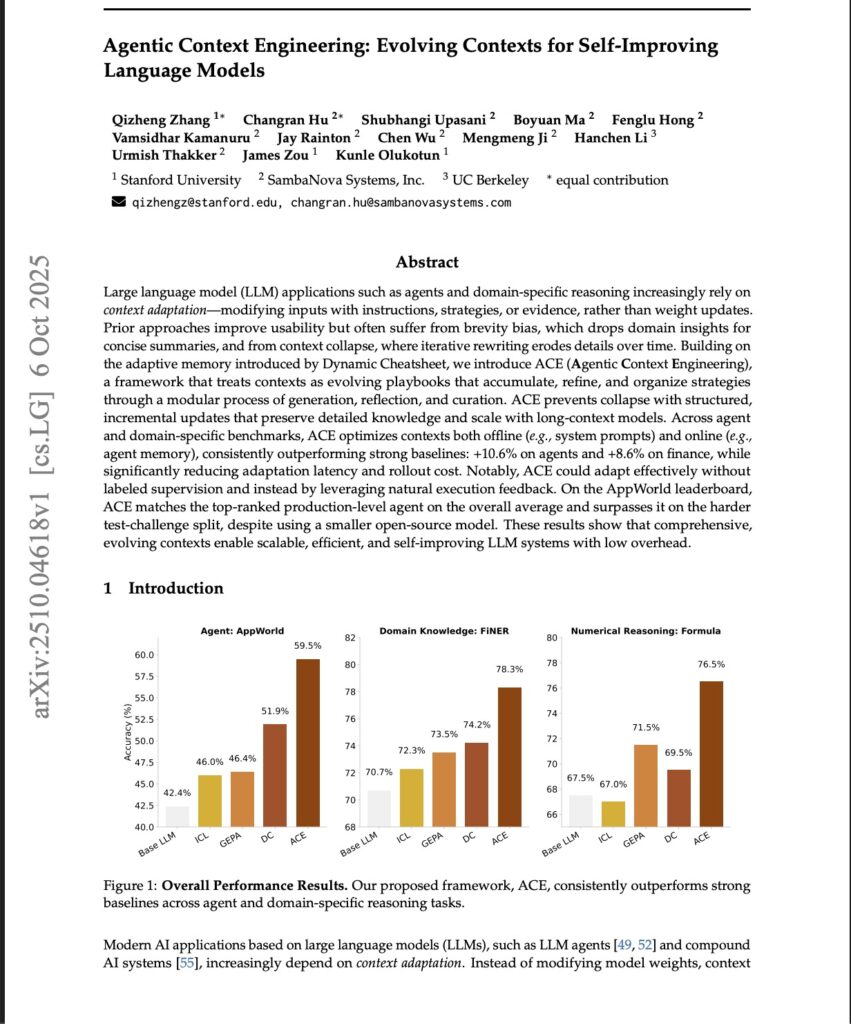

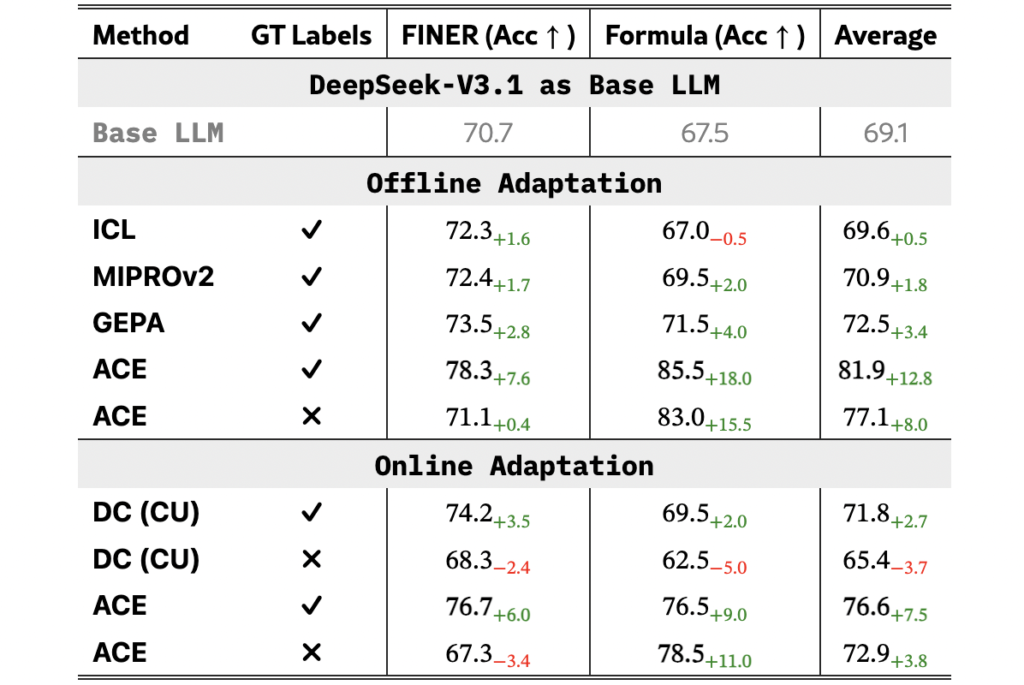

- Performance leaps: In Stanford’s experiments, ACE improved results by 10.6% on agent benchmarks and 8.6% on financial reasoning tasks, rivaling GPT-4-level performance while using smaller, open-source models.

- Transparency and safety: Because its learning is stored as human-readable entries (“bullets” in the playbook), ACE makes model evolution auditable and controllable.
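One way to picture auditable “bullets” is as small records that carry their own feedback history. The sketch below is loosely modeled on that idea; the field names, the helpful/harmful counters, and the pruning rule are assumptions for illustration, not ACE’s exact schema.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Bullet:
    bullet_id: str     # stable identifier so a reviewer can trace each entry (assumed field)
    text: str          # the human-readable lesson itself
    helpful: int = 0   # times feedback credited this entry (assumed field)
    harmful: int = 0   # times feedback blamed this entry (assumed field)


def record_feedback(bullets: Dict[str, Bullet], bullet_id: str, helpful: bool) -> None:
    """Attribute a success or failure signal to one playbook entry."""
    entry = bullets[bullet_id]
    if helpful:
        entry.helpful += 1
    else:
        entry.harmful += 1


def prune(bullets: Dict[str, Bullet]) -> List[str]:
    """Hypothetical pruning rule: drop entries blamed more often than credited."""
    removed = [bid for bid, b in bullets.items() if b.harmful > b.helpful]
    for bid in removed:
        del bullets[bid]
    return removed


def audit_log(bullets: Dict[str, Bullet]) -> str:
    """Render the playbook as plain text a human can read, review, or edit."""
    return "\n".join(
        f"[{b.bullet_id}] (+{b.helpful}/-{b.harmful}) {b.text}" for b in bullets.values()
    )


playbook = {
    "fs-001": Bullet("fs-001", "Use os.path.expanduser() for paths that start with '~'."),
    "fmt-002": Bullet("fmt-002", "Always answer in a single sentence."),
}
record_feedback(playbook, "fs-001", helpful=True)
record_feedback(playbook, "fmt-002", helpful=False)
print(prune(playbook))      # ['fmt-002']
print(audit_log(playbook))  # [fs-001] (+1/-0) Use os.path.expanduser() for paths that start with '~'.
```

Because every entry is plain text with an ID, a reviewer can inspect or delete a specific lesson without touching the model’s weights.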

From Prompt-Engineered to Agentic AI

| Feature | Prompt-Engineered LLMs | ACE-Enabled Agentic LLMs |
|---|---|---|
| Learning type | Static | Continuous and reflective |
| Context | Temporary (session-based) | Persistent and evolving |
| Improvement method | Manual prompt tuning | Automatic context refinement |
| Cost of improvement | High (retraining/fine-tuning) | Low (incremental updates) |
| Transparency | Limited | Fully interpretable |

ACE effectively transforms language models from reactive tools into adaptive agents.

Whereas prompt-engineered models depend entirely on user instructions, ACE models evolve their understanding autonomously, bridging the gap between today’s intelligent systems and tomorrow’s self-learning AI.
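The table’s “Persistent and evolving” context can be illustrated with a small sketch of a playbook that survives between sessions. It assumes the playbook is a list of strings stored in a JSON file; the file name and the `load_playbook`/`save_playbook` helpers are hypothetical, and this storage choice is an assumption, not something taken from ACE.

```python
import json
import os
import tempfile
from typing import List


def load_playbook(path: str) -> List[str]:
    """Return saved lessons, or an empty playbook on the very first session."""
    if not os.path.exists(path):
        return []
    with open(path, encoding="utf-8") as f:
        return json.load(f)["bullets"]


def save_playbook(bullets: List[str], path: str) -> None:
    """Write the playbook as human-readable JSON so it outlives the current session."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump({"bullets": bullets}, f, indent=2)


# Hypothetical storage location; a real deployment might use a database instead.
path = os.path.join(tempfile.mkdtemp(), "playbook.json")

# Session 1: start empty, learn one lesson, persist it.
session1 = load_playbook(path)
session1.append("When working with user directories, always use os.path.expanduser().")
save_playbook(session1, path)

# Session 2: a fresh start reloads what session 1 learned instead of beginning from nothing.
session2 = load_playbook(path)
print(session2)
```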

The Future with ACE

Imagine a future where:

- Your AI assistant remembers your preferences, adapts to your style, and improves every day.

- AI systems in healthcare or finance continuously refine their reasoning from real-world feedback.

- Enterprises no longer need expensive model retraining cycles, because ACE helps AI systems learn through experience.

That is the promise of ACE – a self-evolving artificial intelligence built on continuous, interpretable context growth.

By separating knowledge evolution from model retraining, ACE could make AI development faster, safer, and far more sustainable.

The Dawn of Self-Learning AI

If the past decade of AI was defined by training larger models, the next may be defined by teaching models how to learn on their own.

Agentic Context Engineering doesn’t just make AI smarter; it changes what “learning” means in the age of large language models.

The question is no longer “Can AI learn?” It’s “How soon will it start learning by itself?”